RoboGSim: A Real2Sim2Real Robotic Gaussian Splatting Simulator

Xinhai Li1*,

Jialin Li2*,

Ziheng Zhang3†,

Rui Zhang4,

Fan Jia3,

Tiancai Wang3,

Haoqiang Fan3,

Kuo-Kun Tseng1‡,

Ruiping Wang2‡

1Harbin Institute of Technology, Shenzhen

2Institute of Computing Technology, Chinese Academy of Sciences

3MEGVII Technology

4Zhejiang University

*Equal Contribution

†Project Lead

‡Corresponding Author

lixinhai a/t stu.hit.edu.cn {lijialin24s, wangruiping} a/t ict.ac.cn

{zhangziheng, wangtiancai} a/t megvii.com kktseng a/t hit.edu.cn

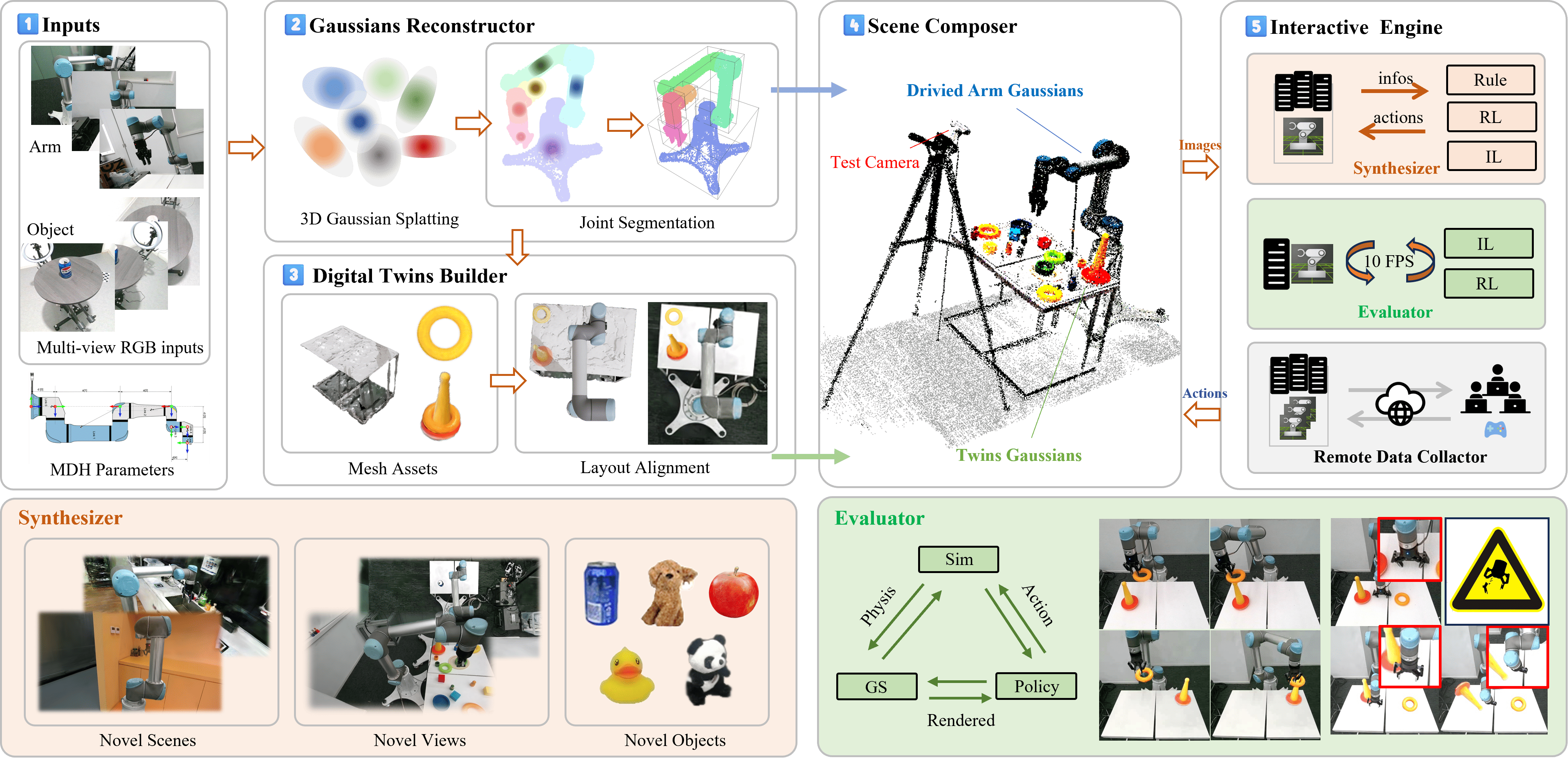

Overview of the RoboGSim Pipeline: (1) Inputs: multi-view RGB image sequences and the MDH parameters of the robotic arm. (2) Gaussian Reconstructor: reconstructs the scene and objects with 3DGS, segments the robotic arm, and builds an MDH kinematic drive graph for accurate arm motion modeling. (3) Digital Twins Builder: performs mesh reconstruction of the scene and objects, then creates a digital twin in Isaac Sim to ensure high simulation fidelity. (4) Scene Composer: combines the robotic arm and objects in simulation, identifies optimal test viewpoints via tracking, and renders images from novel viewpoints. (5) Interactive Engine: (i) synthesized images with novel scenes, views, and objects are used for policy learning; (ii) policy networks can be evaluated in a closed-loop manner; (iii) embodied data can be collected with VR/Xbox equipment.
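The caption names MDH parameters and a kinematic drive graph but does not say how the arm's Gaussians are driven. As a minimal sketch, assuming the standard modified (Craig) Denavit-Hartenberg convention, revolute joints only, and that each segmented link's Gaussians move rigidly with their link frame, the Python below computes base-to-link poses and carries a link's Gaussian centres from the captured configuration to a new one. All function names and the `(alpha, a, d, theta_offset)` row layout are hypothetical illustrations, not RoboGSim's API.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]  # 4x4 homogeneous transform, row-major
Point = Tuple[float, float, float]


def identity() -> Matrix:
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def mdh_transform(alpha: float, a: float, d: float, theta: float) -> Matrix:
    """Transform from link frame i-1 to frame i in the modified (Craig) DH
    convention: RotX(alpha_{i-1}) * TransX(a_{i-1}) * RotZ(theta_i) * TransZ(d_i)."""
    ca, sa = math.cos(alpha), math.sin(alpha)
    ct, st = math.cos(theta), math.sin(theta)
    return [
        [ct, -st, 0.0, a],
        [st * ca, ct * ca, -sa, -sa * d],
        [st * sa, ct * sa, ca, ca * d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def rigid_inverse(T: Matrix) -> Matrix:
    """Invert [R t; 0 1] as [R^T  -R^T t; 0 1] (valid for rigid transforms only)."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    ti = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [ti[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def forward_kinematics(mdh_params: Sequence[Tuple[float, float, float, float]],
                       joint_angles: Sequence[float]) -> List[Matrix]:
    """Base-to-link transforms for a serial chain of revolute joints.

    Hypothetical layout: mdh_params[i] = (alpha_{i-1}, a_{i-1}, d_i, theta_offset_i);
    the joint angle q_i is added to theta_offset_i.
    """
    if len(mdh_params) != len(joint_angles):
        raise ValueError("need exactly one joint angle per MDH row")
    T = identity()
    poses = []
    for (alpha, a, d, offset), q in zip(mdh_params, joint_angles):
        T = matmul(T, mdh_transform(alpha, a, d, offset + q))
        poses.append(T)
    return poses


def move_link_points(points: Sequence[Point], T_ref: Matrix, T_new: Matrix) -> List[Point]:
    """Rigidly carry points attached to one link (e.g. its Gaussian centres)
    from the captured pose T_ref to a new pose T_new: p' = T_new * T_ref^-1 * p.
    Gaussian orientations/covariances would need the same rotation; omitted here."""
    delta = matmul(T_new, rigid_inverse(T_ref))
    moved = []
    for p in points:
        h = (p[0], p[1], p[2], 1.0)
        moved.append(tuple(sum(delta[i][k] * h[k] for k in range(4)) for i in range(3)))
    return moved
```

For a planar two-link chain whose second MDH row has a_1 = 1, setting the first joint to π/2 moves the second link's frame origin from (1, 0, 0) to approximately (0, 1, 0), and a Gaussian centre at (2, 0, 0) on that link follows it to approximately (0, 2, 0). Composing the per-link transforms along the chain is what lets a single set of joint angles re-pose every segmented link at once.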

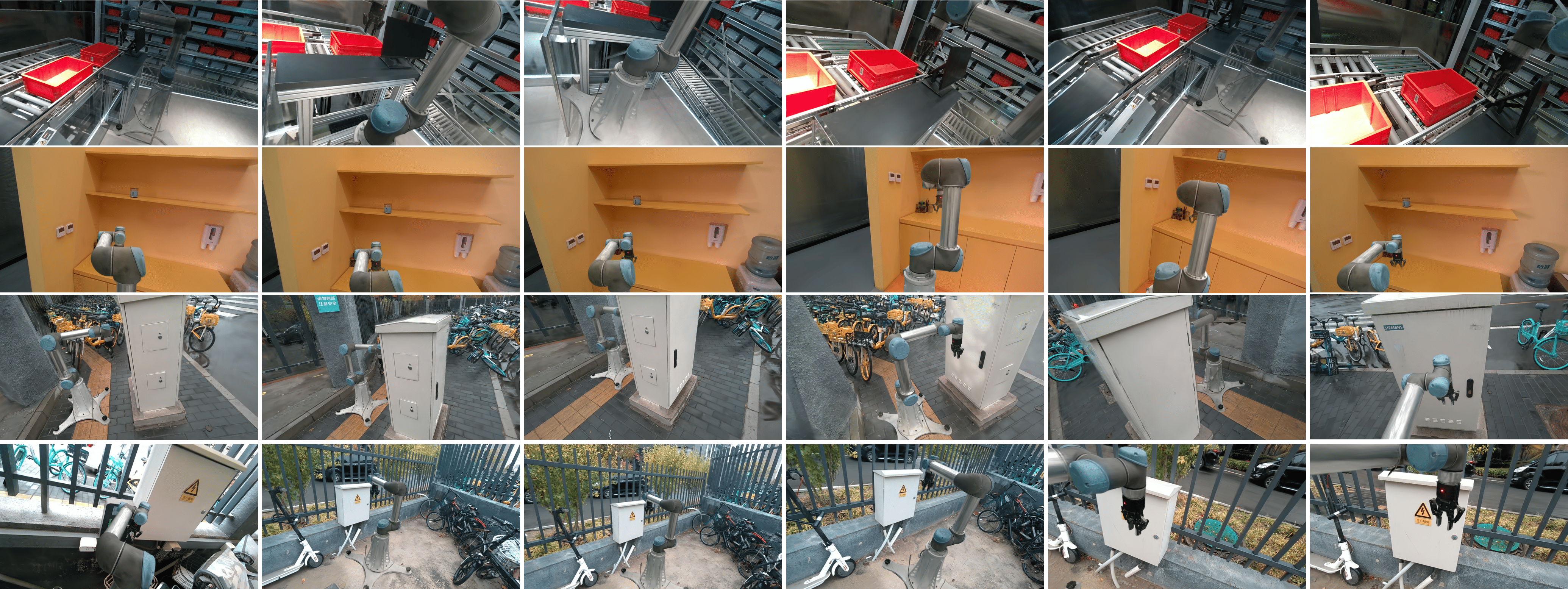

Novel Scene Synthesis: We show the robot arm physically migrated to new scenes, including a factory, a shelf, and two outdoor environments. The high-fidelity multi-view renderings demonstrate that RoboGSim lets the robot arm operate consistently across these diverse scenes.

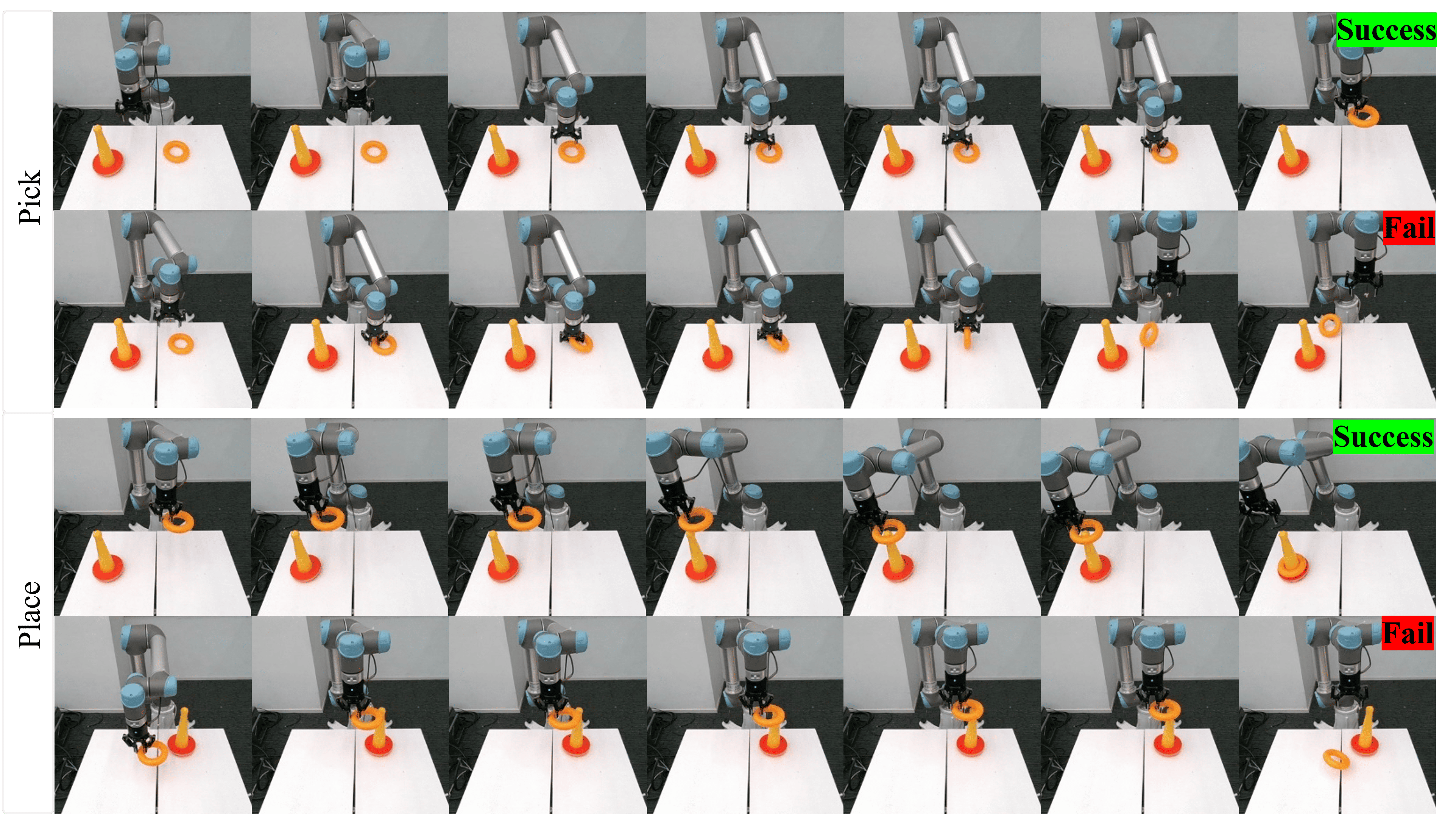

RoboGSim as Synthesizer: The first two rows show real-robot videos captured from the test viewpoint, illustrating successful and failed cases of the VLA model on the Pick task. The last two rows show real-robot videos from the same test viewpoint, illustrating successful and failed cases on the Place task.

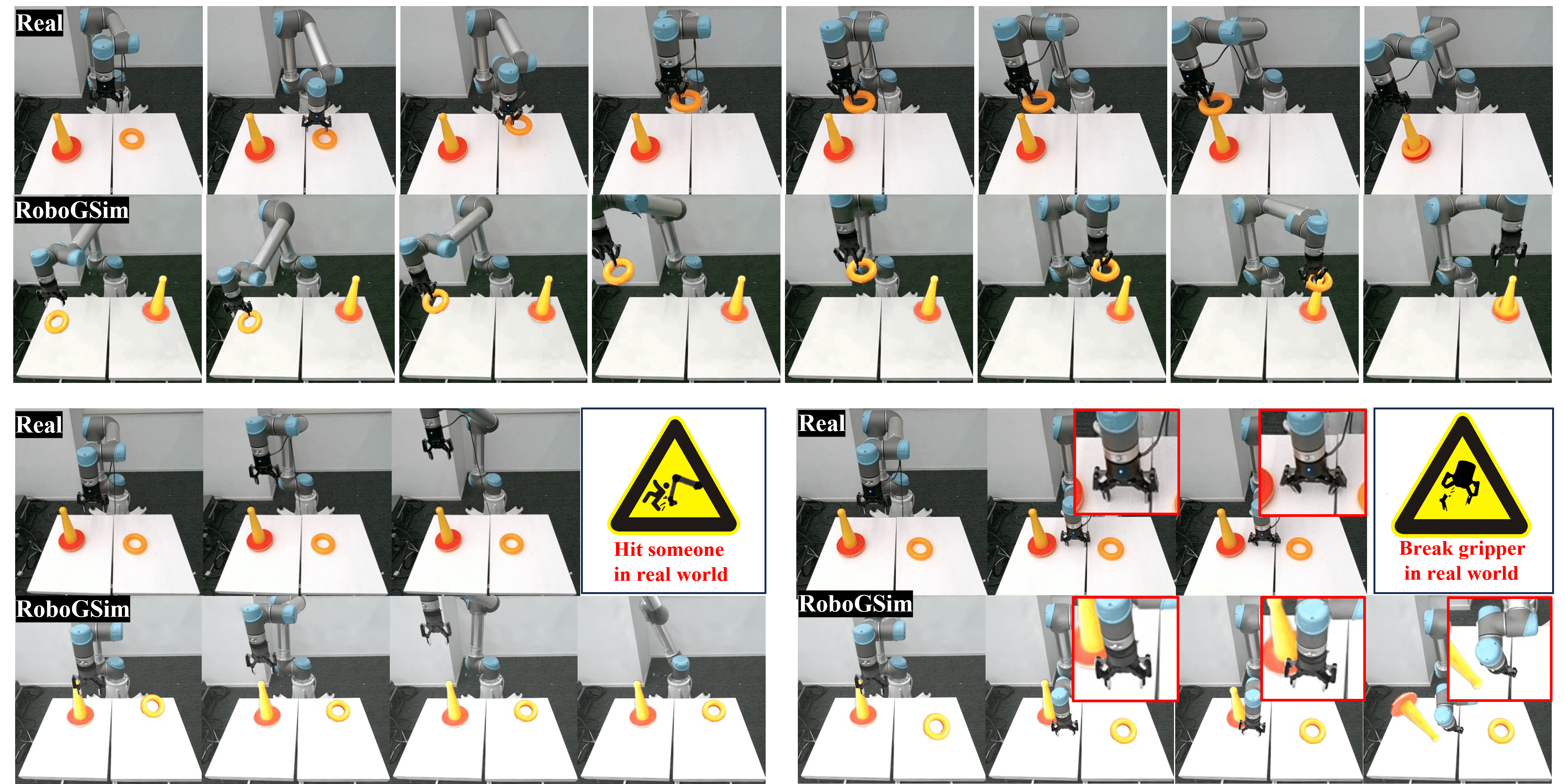

RoboGSim as Evaluator: The first two rows, labeled "Real" and "RoboGSim", show footage captured from the real robot and from RoboGSim, respectively; both are driven by trajectories generated by the same VLA network. In the third row, the left side shows real-world inference in which the robot arm exceeds its operational limits, resulting in a manual shutdown, and the right side shows a case where a wrong decision by the VLA network causes the robotic arm to collide with the table. The fourth row presents the corresponding RoboGSim simulation results, which avoid the risk of such dangerous collisions on real hardware.

RoboGSim as Evaluator: The first two rows, labeled "Real" and "RoboGSim", show the footage captured from the real robot and RoboGSim, respectively. They are both driven by the trajectory generated by the same VLA network. In the third row, the left side shows the real-world inference where the robot arm exceeds its operational limits, resulting in a manual shutdown. The right side shows an instance where a wrong decision from the VLA network, causes the robotic arm to collide with the table. The fourth row presents the simulation results from RoboGSim, which can avoid dangerous collisions.